Meeting people in real life (IRL) has become increasingly challenging, and many people now rely on dating apps. However, these apps depend heavily on algorithms that mirror the engagement-driven business models of social media platforms, which are governed by the principles of surveillance capitalism and the attention economy.

Extensive investigations by journalists worldwide, such as the well-known two-year investigation by French journalist Judith Duportail, have unveiled disconcerting practices within dating app algorithms. Tinder is a prime example: the platform holds vast amounts of user data and can identify potential matches effectively, yet compatible profiles are deliberately withheld to prolong user engagement. Matches appear unpredictably, much like gambling mechanics, and the resulting frustration pushes users toward subscription upgrades or paid profile boosts that promise greater visibility and access to other profiles.

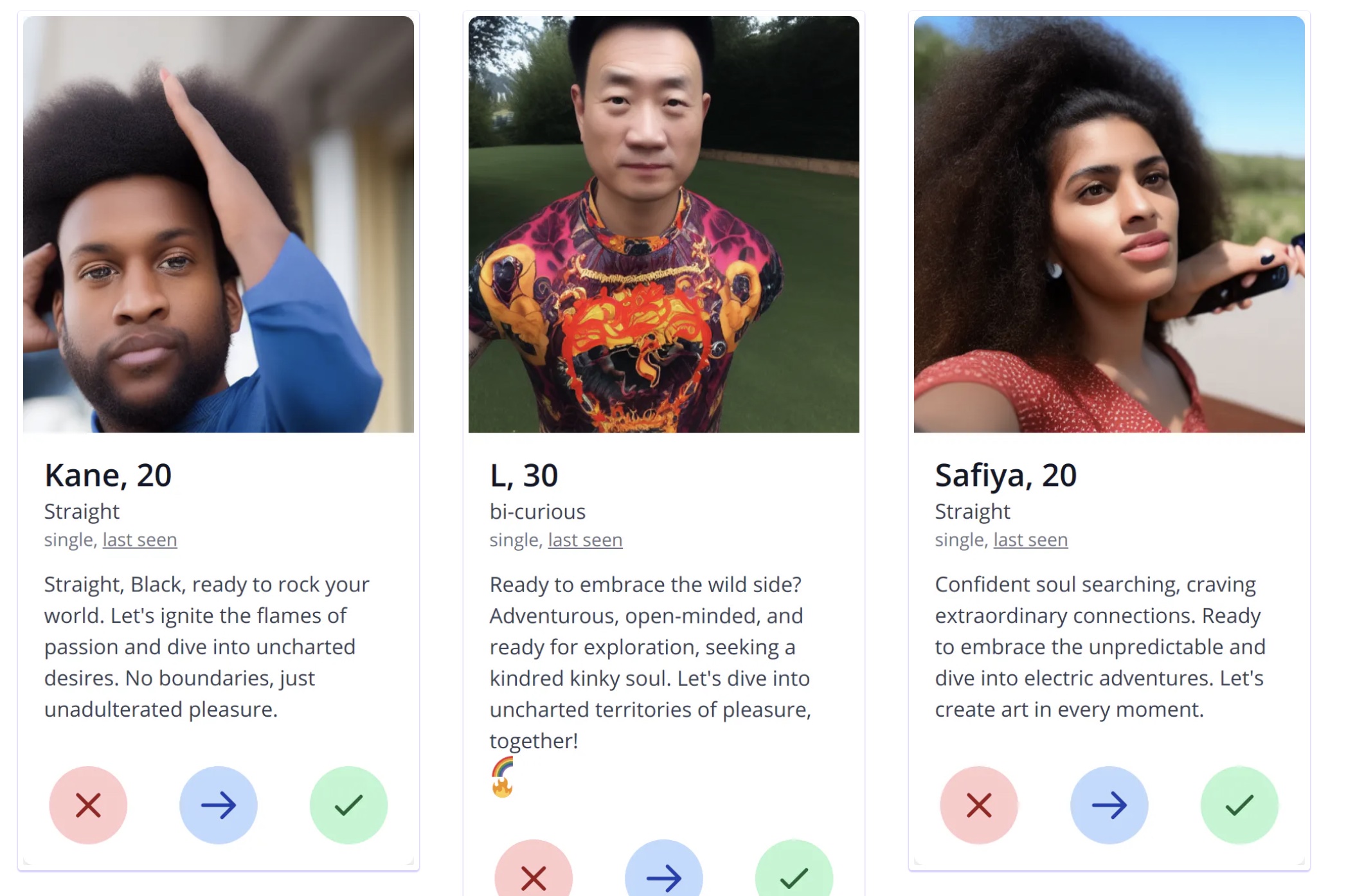

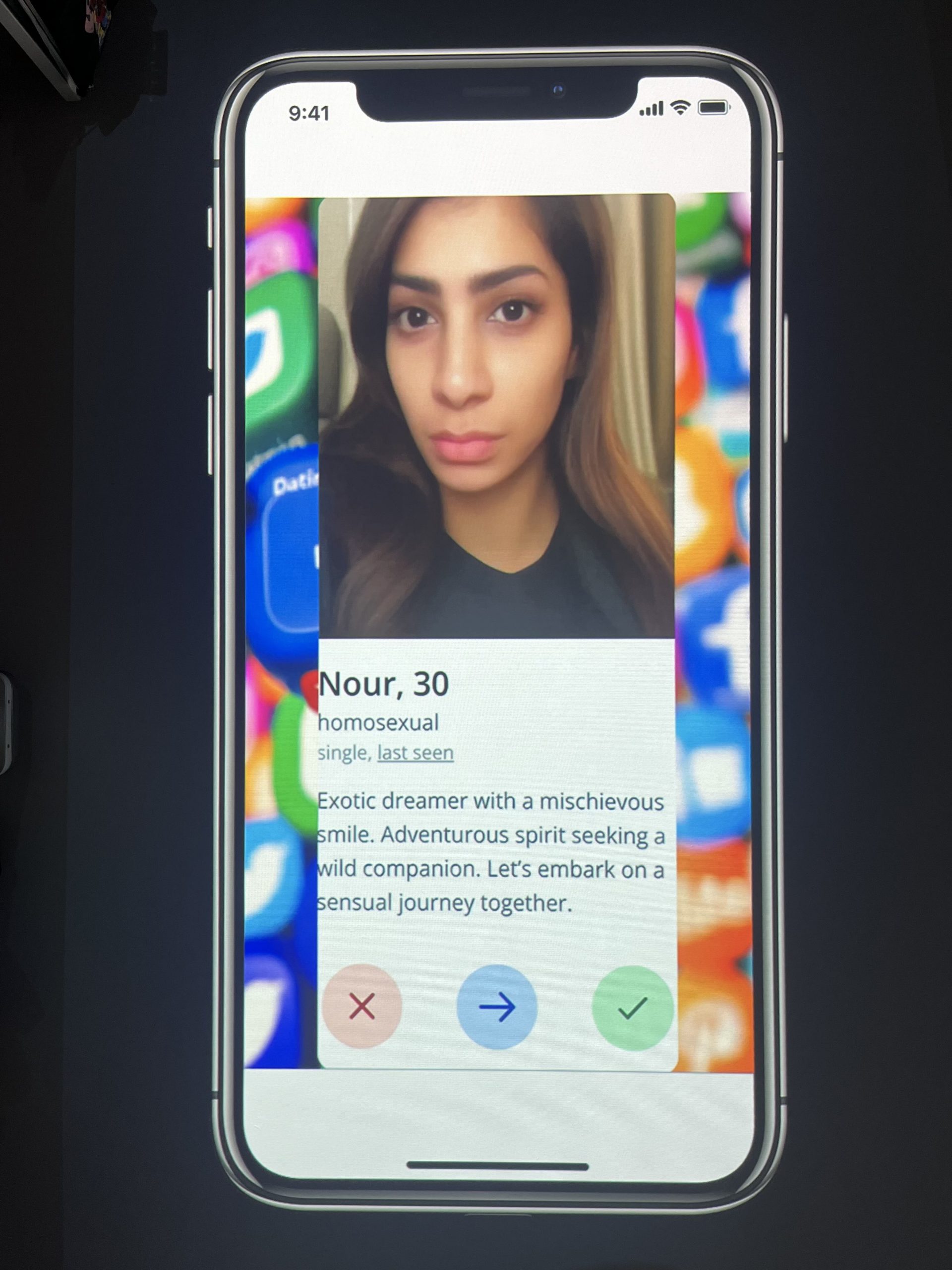

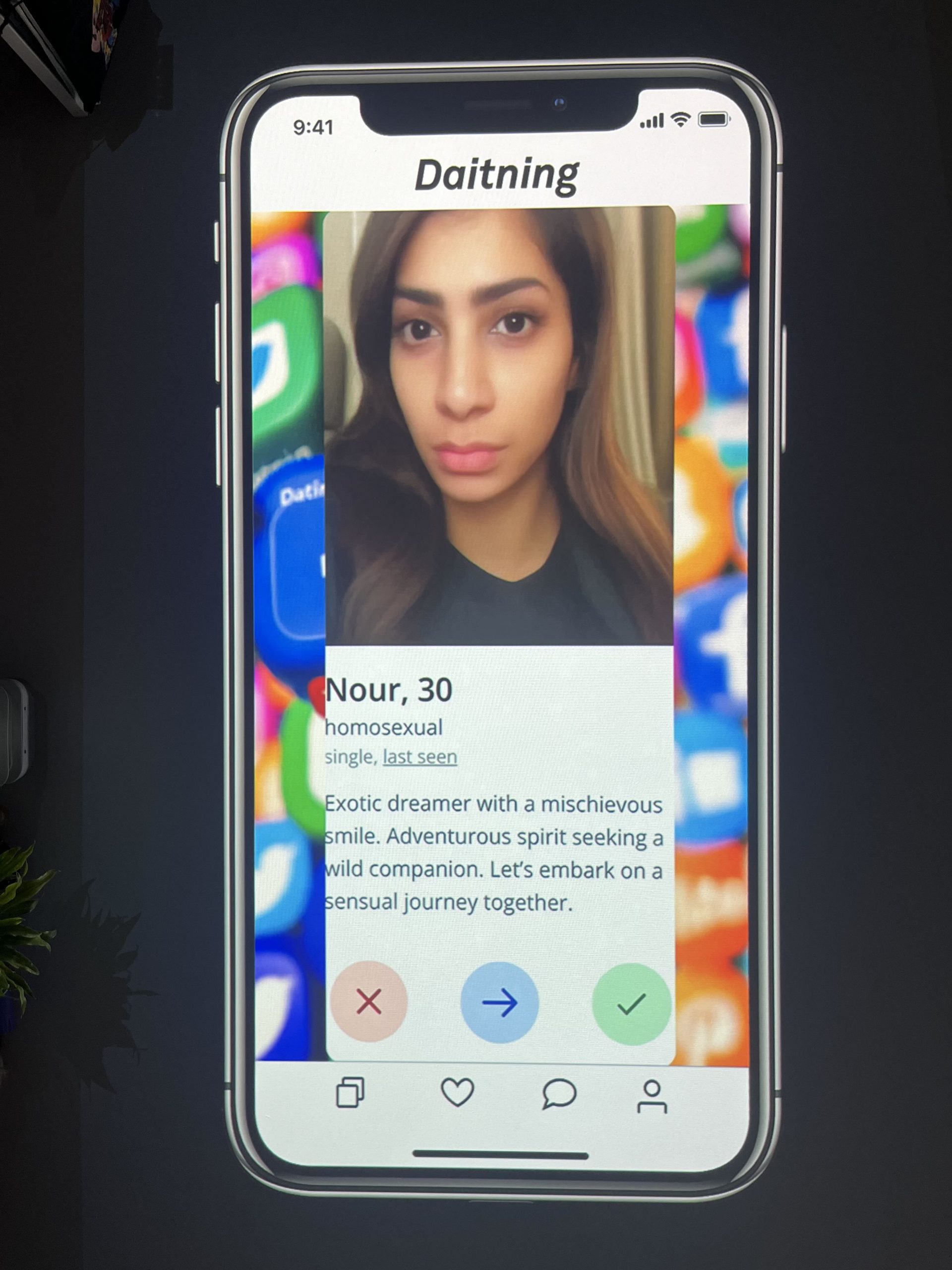

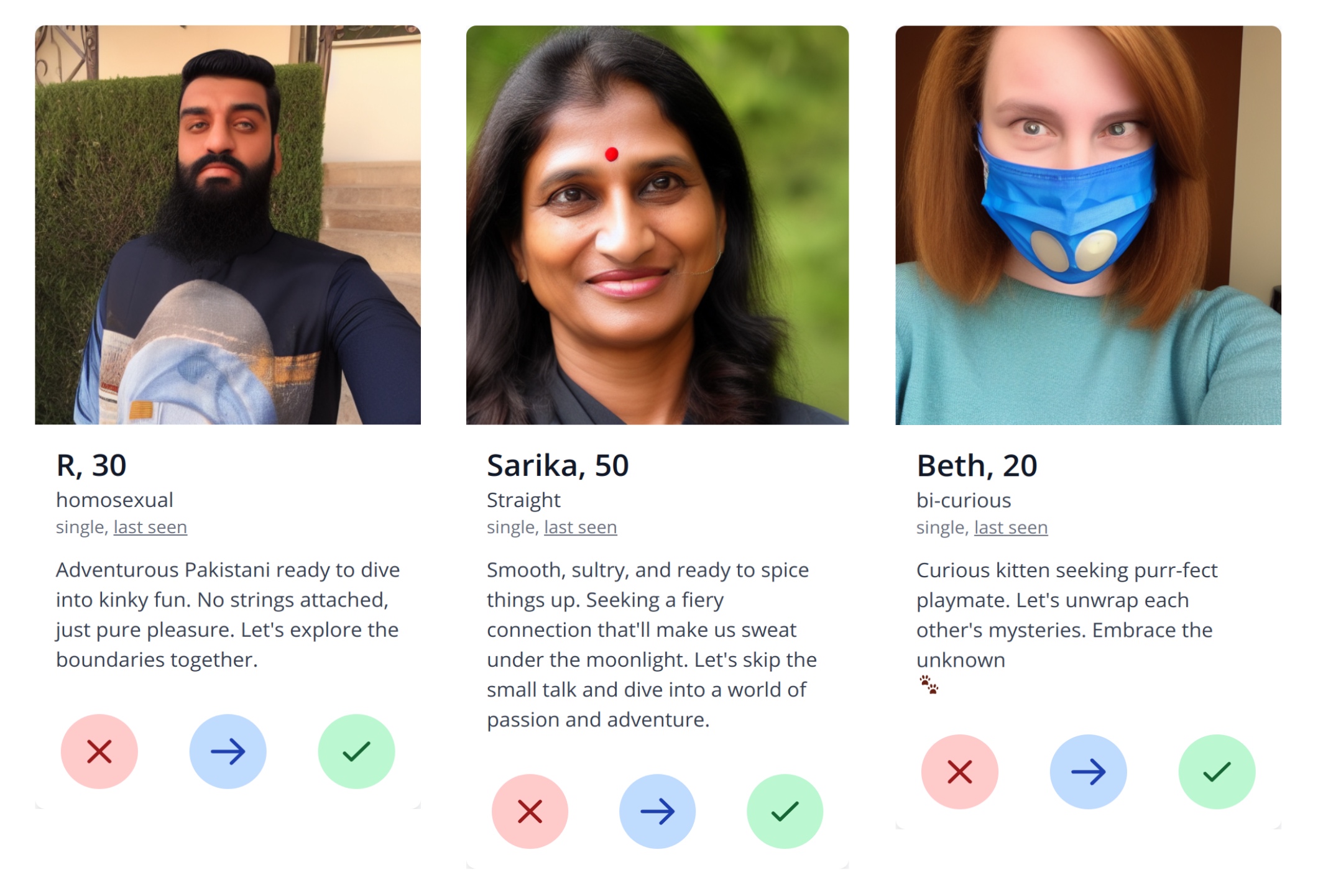

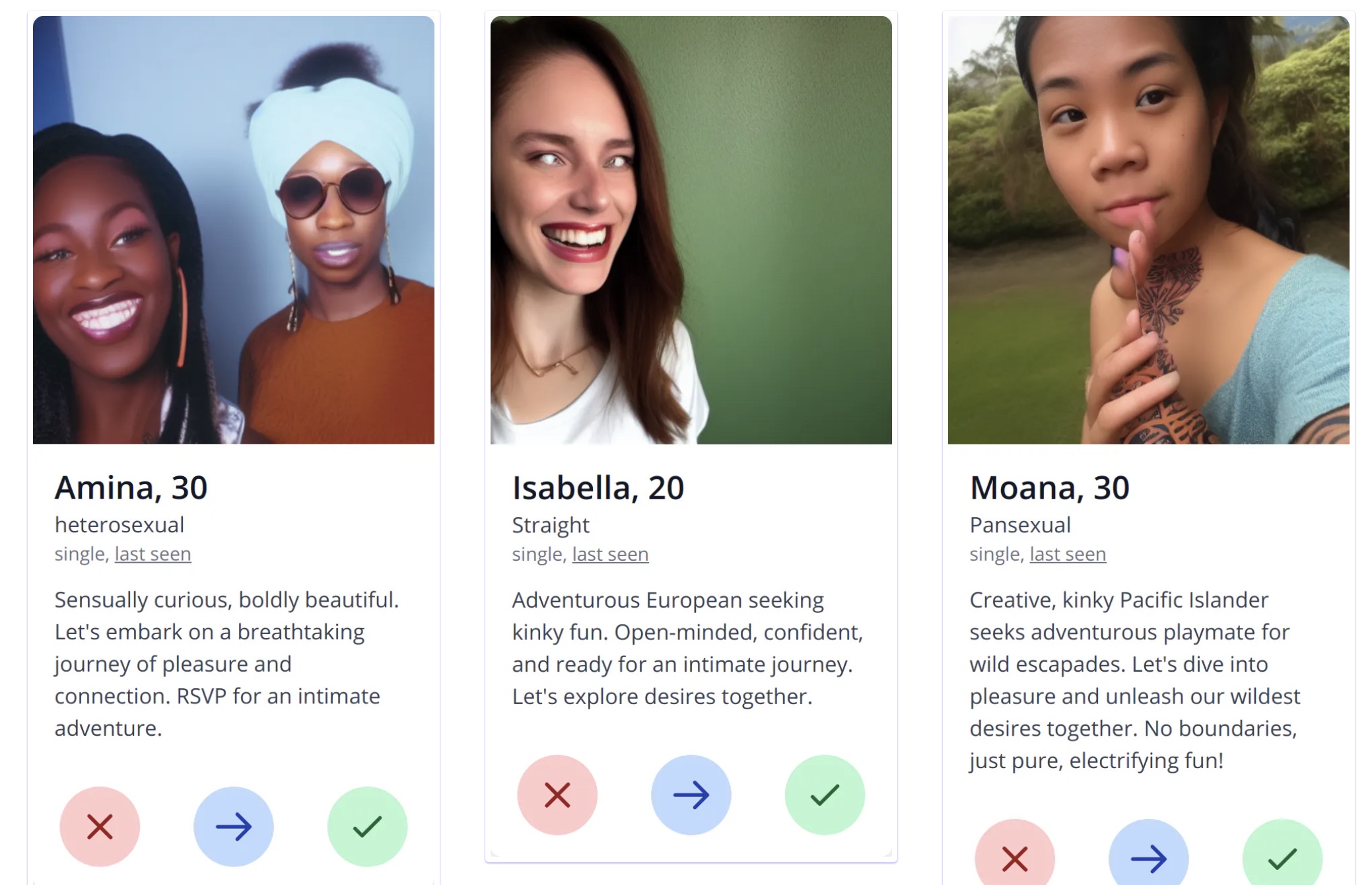

Dating app algorithms claim to understand who we are as individuals and what we want, and this claim is what compelled the artist Cadie Desbiens-Desmeules to invert the power dynamic by asking what algorithms truly know about us: “does it match?” “Unmatched” investigates the phenomenon of algorithmic discrimination through the lens of dating applications. The installation offers insights into the biases ingrained in the datasets, such as LAION and Common Crawl, that are widely used to train most image models and large language models, including GPT and DALL-E. These biases particularly affect the representation of non-heteronormative sexual orientations, perpetuating stereotypical depictions and undeniable cultural biases.

In this art experiment, Cadie Desbiens-Desmeules collaborated with Glif, a web-based AI generator platform, to develop an app that creates entirely fictional AI profiles. Instead of relying on real profiles, this playful approach uses GPT-4 and its Vision capability to craft new personas within the parameters defined by existing dating apps. The work explores how well current large language models understand our cultures, people, and the dating world in general. Interestingly, it also highlights that AI does not necessarily grasp cultural differences accurately: some descriptions do not align with their profiles, even when GPT-4 is instructed to use Vision to check that the description matches the image, cultural origin, gender, and sexual orientation. This suggests that current LLMs misunderstand certain identities and contexts.

The artwork demonstrates the ethical concerns surrounding algorithmic discrimination, especially when a machine learning model is statistically accurate yet still morally problematic because it reflects or perpetuates discrimination. Whether direct or indirect, algorithmic discrimination can be very harmful to individuals, and because of algorithmic opacity, it may occur without our knowledge. The risk of compounding or exacerbating existing or past injustices is very high, particularly with AI systems that fall under ‘automation’ or ‘discriminative AI.’ Discriminative machine learning has been widely used over the past decade in digital contexts, for decision-making by classification or for identifying patterns in data, as in algorithmic filtering on social media platforms, targeted advertising, and surveillance. However, as the artist’s experiment shows, algorithmic biases also exist in generative AI, highlighting the need for a more comprehensive understanding and ethical consideration in the development of AI technologies.

Images generated with Stable Diffusion (not with Glif.app) using real dating profile descriptions as prompts.

These revelations raise concerns about the datasets used to train large language models and image models, and about algorithms in general, since most of them rely on the same common datasets scraped from the internet. Many academic studies have shown not only that AI reinforces mainstream culture but also that these datasets are full of undesirable content, including hate speech, explicit material, and harmful stereotypes such as racism, even after filtering procedures.



Programming with Glif.app


This art project serves as a thought-provoking commentary on the unintended consequences and biases inherent in the algorithms shaping our digital interactions, prompting a critical examination of the ethical dimensions of algorithmic matchmaking in the realm of online dating.

Cadie Desbiens-Desmeules. 2024.

Project is ongoing: work in progress.